Before we begin

In this post, which examines the American zeitgeist, I adopt a tone of playful criticism. Please don’t mistake me for one of those tedious killjoys who only find fault. Believe me, I am very grateful for my country and its people.

Introduction

As everybody knows, Google Autocomplete is a function of Google Search that starts providing feedback even before you’ve finished typing your query. The search engine predicts what you’re looking for based on what others have searched for. As such, it’s a good indicator of where people’s heads are. In a previous post I asked Google a range of questions designed to examine the national zeitgeist as of March 31, 2018. Today, exactly four years later, I googled the same questions to see how things have changed and what people seek answers for in 2022.

(Note: to improve the accuracy of this experiment—that is, to make it more about the people instead of about me—I turned off “Personal Results” so my own previous searches won’t influence the Autocomplete suggestions.)

Persecution mania

Four years ago, the query “is it illegal to…” prompted Autocomplete suggestions that included “to burn the US flag,” “to drive without shoes,” “to change lanes in an intersection,” “to burn money,” and “to run away.” Today, this same short phrase produces the following top Autocomplete suggestions:

- Is it illegal to drive barefoot

- Is it illegal to collect rainwater

- Is it illegal to work weekends in France

- Is it illegal to hit a girl

- Is it illegal to burn money

Compared to four years ago, it looks like Americans still hate wearing shoes; are perhaps more patriotic, being a) no longer interested in flag-burning, and b) presumably indignant about the lazy French; are apparently clueless about the most basic rules of law and human decency as regards assault; and (the current stock market nosedive notwithstanding) still feel uncomfortable with their own wealth (or have become inured to losing their money). It’s bizarre that anybody might assume collecting rainwater could be illegal … I mean, we’re talking about something that literally falls from the sky! And how on earth would enforcing such a law be arranged? Cops peering over your backyard fence looking for buckets?

Tweaking the query to “Is it against the law” produces just a few changes. Driving barefoot is still the first suggestion, but then we get:

- Is it against the law to dumpster dive

- Is it against the law to record someone

- Is it against the law to slap someone

- Is it against the law to threaten someone

- Is it against the law to hit a woman

How strange that violence against a female is something so commonly searched on. I mean, why would this be a different question than hitting, slapping, or threatening anyone? It’s hard to speculate what was different in 2018....

Moving on to “can you be arrested for,” this yields almost totally different results than “against the law” (the only common suggestion being “for slapping someone,” which perhaps stems from the recent Oscar dustup and the subsequent endless analysis of whether slapping can be assault). Here are the other top suggestions:

- Can you be arrested for speeding

- Can you be arrested for driving without a license

- Can you be arrested for a misdemeanor

- Can you be arrested for trespassing

- Can you be arrested for not paying child support

It looks like the modern American profile is starting to take shape: these are people who possibly can’t afford shoes, are forsaking pricey bottled water for local rainwater, may engage in dumpster diving, can’t afford child support, have no interest in safe driving, and are quite ready to commit violence if they think they can get away with it. Not a pretty picture.

Now, given all the national attention on law enforcement after the George Floyd murder, I was eager to see what Autocomplete would suggest for the next category, “can a police officer…” vs. last time around. In both cases the top five included “take your car” and “lie to you.” This year we have a few new suggestions:

- Can a police officer serve court papers

- Can a police officer run your plates

- Can a police officer transfer to another state

- Can a police officer question a minor

- Can a police officer dismiss a ticket

The “transfer to another state” would seem to be a question asked by an officer; maybe some of them don’t like the increasing accountability their communities have demanded. As for serving court papers, running plates, and dismissing tickets, these might just reflect the popularity of current police procedural TV shows. That leaves questioning a minor; I can’t see why this would be a problem, but the cop should expect to have the interview recorded by at least a few smartphones so he’d better behave.

Last time around, three out of the top five suggestions for “are you allowed to” involved smoking weed. This time, none of the suggestions involve that; I guess people have forgotten that weed was ever illegal, and now just assume that half the citizenry is stoned off their gourds at any given moment, and nobody cares. Here are the top five Autocomplete suggestions right now:

- Are you allowed to retire at age 50

- Are you allowed to curse at the Oscars

- Are you allowed to carry a knife in California

- Are you allowed to fight in hockey

- Are you allowed to leave North Korea

Seems kind of remarkable that there are people who are presumably financially capable of retiring but who nonetheless have no idea how the world works. It could be they're just dreaming, or maybe they want another reason to be angry at wealthy people. As for the knife inquiry and the fighting in hockey, I guess that just reinforces the portrait of a populace ready to beat someone’s ass.

Actually, I’ve often wondered why it’s okay for hockey players to fight, without being subject to the normal confines of the law. Think about it: if two players get in an all-out brawl on the ice, they get five minutes or so in the penalty box, but if they have their fight in the locker room, or out in the parking lot, or at a bar later, that would be assault and they could be arrested. It’s like the rules of the game supersede the law, like the NHL is the higher authority. I discussed this with my daughter today and she wasn’t quite sure what I was getting at. “Let’s take it a step further,” I said, “and think of one player killing another, for which he’s merely ejected from the game.” My daughter put in that this would surely increase the popularity of hockey. I decided more research was necessary. This article quotes NHL Commissioner Gary Bettman as saying fighting is “a thermostat for the game,” and that it “may prevent other injuries.” There wasn’t much detail provided on this, so I turned to YouTube for more insight. My daughter and I watched “Top 10 NHL fights of all time” and though we didn’t get a lot of answers, it was a pretty amazing spectacle … our hearts were racing by the end. Of particular note is that the announcers never passed judgment, like everyone saw with Will Smith’s slap at the Oscars. On the contrary, hockey commentators announce a fight as though it’s a boxing match (“ … and Smith comes in with a strong left!”). The cheering of the fans, and even the music playing in the stadium, continue unabated even with some of these fights lasting for several minutes. Anyway, I don’t know where I’m going with all this, but sometimes that’s just how blogging goes.

Who what where why how

Now on to the larger, more existential matters. Concerning the “what” questions, four years ago Americans sought information about Palm Sunday, Bitcoin, their IP addresses, rambler, and net neutrality. Today, the only one of these questions still puzzling people is “what is my IP,” which is pretty silly because these addresses are assigned dynamically via DHCP, so they’re practically the opposite of a fingerprint (i.e., they mean nothing). Here are the other top inquiries:

- What is alopecia

- What is an nft

- What is wordle

- What is a viscount

- What is lynching

The alopecia inquiry pertains to the ailment, suffered by Jada Pinkett Smith, that caused her baldness, which led to the Chris Rock joke that in turn led to the legendary slap by Will Smith. I guess Oscar fans are trying to educate themselves so they know what side to take when they start in on their trolling. With that in mind, along with the cringe-inducing, long-overdue inquiry “what is lynching,” I have to wonder if there’s any point to the human race.

As for NFT (i.e., non-fungible tokens), that’s arguably a variation on the theme of cryptocurrency, so nothing new there except you’re possibly buying nothing for nothing as opposed to something for nothing. Wordle is apparently a word game that as an English major I guess I should love, except that working out the solution to a pointless quiz is no match for actual reading, which is something most Americans know about only from books and have never actually attempted themselves.

Moving on to viscount, I chalk it up to fans of the TV show “Bridgerton,” the insanely popular soap opera that rips off actual literature and gets away with it because, again, nobody reads. So it appears our broke, violence-addicted, bored Americans still don’t understand the royal hierarchy that well. (Which is fine ... I mean, who gives a shit?)

Shifting gears to the “why” question, the first one (“why is the sky blue”) is the same Autocomplete suggestion as four years ago, and “why is my poop green” moved from fourth place to third. Here are new suggestions for 2022:

- Why is russia invading ukraine

- Why is my eye twitching

- Why is gas so expensive

- Why is my poop black

- Why is russia so big

Let’s consider the top answer to the first common question, “Why is the sky blue.” Four years ago, the first response was, “Because it’s a beautiful day.” Today, the top answer is, “The sky is blue due to a phenomenon called Rayleigh scattering, which is the scattering of electromagnetic radiation (of which light is a form) by particles of a much smaller wavelength.” So we can conclude that in the last four years, the Internet authorities have lost their sense of humor.

As far as the other questions, since these are what’s on people’s minds, I’ll save you a Google search (and the pruning invariably required) and give you some quick resources so you don’t have to sound completely clueless should these come up in conversation.

Regarding the question of why Russia has invaded Ukraine, this article is pretty useful, and states, “The Russian leader's initial aim was to overrun Ukraine and depose its government, ending for good its desire to join the Western defensive alliance Nato… Beyond his military goals, President Putin's broader demand is to ensure Ukraine’s future neutrality.” I think my own explanation, “Because Russia is led by a blind idiot autocrat,” is probably a similarly useful response for most American audiences, with “commie” and “pinko” available as garnish depending on your bent.

A guide to poop color and what it could mean is here. And some eye-twitching causes are here. I’ve had some trouble with eyelid twitching myself, which I associated with stress. Fortunately, the Atlantic Ocean separates my home from Russia, so my eyelids are behaving at present. (Let’s not get into my poop color other than to say it’s boring.)

The high cost of gasoline is nicely summarized in this article, which states, “Oil and gas are global commodities, subject to the whims of notoriously volatile global markets. Their prices are particularly sensitive to geopolitical events and investor sentiment… In fact, in this case, sky-high gas prices came from a confluence of events. As COVID-19 restrictions eased and economies rebounded rapidly, demand for oil spiked. Pandemic-related supply chain disruptions exacerbated the situation... Then, more recently and perhaps most saliently, [oil markets were roiled by] Russia [and its invasion of Ukraine].”

And why is Russia so big? This short video sums it up pretty handily: Russia was able to conquer Siberia, which comprises 75% of its land, because there wasn’t much opposition, it being “a cold land with only one guy per square kilometer in that time.”

Moving on to “who is,” we get a sense of whom Americans are wondering about right now. Four years ago, the top Autocomplete suggestion was “Snoke” (some “Star Wars” character now forgotten entirely), with Jesus taking second and third place, followed by “the richest person in the world” and then Marshmello, some music producer. All have fallen off the suggestion list except for “richest person” which moved up to first place, solidifying our sense that Americans are broke and (possibly as a result) have lost their religion. Here are the next five suggestions:

- Who is julia fox

- Who is will smith’s wife

- Who is moon knight

- Who is in the super bowl 2022

- Who is joe rogan

Julia Fox, not surprisingly, is an actress, and I guess she’s a popular search because of her weird Oscar after-party dress and its matching handbag, made out of human hair. Will Smith’s wife is of course another Oscar-themed question, and I’d like to point out that while she (famously) lacks hair, Julia didn’t steal it.

Moon Knight is some stupid comic book character, so our lawless, violent, broke, godless, and celebrity-obsessed modern Americans have officially regressed to childishness as well. I guess I shouldn’t be surprised that they’ve already forgotten about this year’s Super Bowl, which was last month.

Rounding out this search topic, we get Joe Rogan, who I gather is mainly famous, lately, for a Spotify controversy where Neil Young yanked all his music from the platform to protest their hosting of Rogan’s podcast. There’s a nice synopsis here. Rogan is a major self-taught pundit, who started out in stand-up comedy as opposed to actual journalism. He is also, as far as I can tell, an idiot. But you shouldn’t take my word for it … I’ve never heard his podcast. (If you decide to check it out, don’t report back because I don’t care.)

Now it’s time for the “where” question. For the second time running, top honors go to “where’s my refund,” which showcases the desperation of broke Americans … they actually hope Google has so thoroughly mined their personal data that it can even pinpoint what part of the IRS machinery is currently processing the searcher’s tax return. And, just as we saw four years ago, the second most popular search is “where am I.” So instead of looking around him, perhaps even out a window, the average American is outsourcing his knowledge of his own whereabouts to Google Location Services. Pretty sad.

But now we get a big surprise: the third most popular “where” search is Where the Crawdads Sing, a book. I’ve never seen a book in Autocomplete before, so I’m going to cry bullshit and declare this a paid result. Rounding out the top five we have “where is the super bowl this year” (see above—totally irrelevant question), and “where can I watch euphoria.” Evidently “Euphoria” is a TV show about sexy young drug addicts … just the thing to cheer up us broke, violence-obsessed, stunted, confused, green-pooping, and godless Americans as we (hopefully) come out of the COVID-19 pandemic.

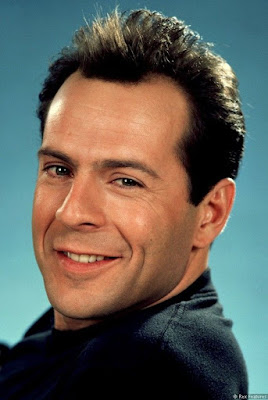

Let’s look at “how.” What are Americans trying to learn how to do in 2022? No longer are we seeking to delete Facebook (perhaps we’ve either given up trying, or succeeded long ago), or trying to buy Bitcoin (it’s far too expensive now that we’re broke), or learning how to tie a tie (since nobody dresses for success anymore, the COVID-driven shift to teleworking having dealt a fatal blow to dress codes nationwide). We’re still ignorant of weights and measures (the #1 suggestion being “how many ounces in a cup” and #5 being ounces in a gallon), clueless in general (#3 being “how many weeks in a year”—are you kidding me?!) but now we suddenly want to know “how to screenshot on mac” which seems incredibly basic (though, factually, I don’t know the answer, but that’s only because I haven’t used a Mac since 1985). Rounding out the top five, Americans suddenly want to know “how old is bruce willis.” This is because his daughter announced yesterday that he’s retired from acting due to a diagnosis of aphasia. I happen to know what that is, but am suddenly surprised that it didn’t come up in my survey of “what is” Autocomplete suggestions. So I tried again and guess what? “What is aphasia” is suddenly #1, Google being as nimble as ever … and alopecia has dropped from the first page of results. (Even though Bruce Willis is famously bald, it’s not because of alopecia. Dang … remember when he had hair? Back then, I had hair too!)

But let’s stop dwelling on the past.

The future

Where are we, as Americans, headed? And how are we feeling about that? To find out, I googled “am I going” and compared the suggestions to 2018 when we were concerned with “am I going crazy,” “am I going to die,” “am I going to hell,” “am I going bald,” and “am I going to die alone.” I see several of these repeated, but new for 2022 are “am I going blind” (probably a result of how glued to our screens we’ve been during the pandemic), “am I going to be okay” (perhaps a hint of optimism creeping through the doldrums?), and “am I going through menopause” (which … I don’t know, might suggest the changing demographics of Internet users?).

Switching to “will I ever,” six of the top eight results (will I ever find love again, find love, get married, be happy, find true love, get over my ex) are the same as four years ago, with only two new Autocomplete suggestions: “will I ever be able to buy a house” (reflects what we already knew—Americans are broke) and “will I ever get a ps5.” I guess PlayStation is seen as the last best hope for entertaining ourselves now that we’ve forgotten how to venture outside our homes.

And now, in keeping with my zeitgeist investigation from four years ago, we move to the final query, which abandons Google in favor of the Magic 8-Ball. I posed the question “will I ever be happy again” to www.ask8ball.net, and it responded, “It is decidedly so.” I think that’s pretty encouraging, given that I’m surrounded by a cohort of broke, clueless, violence-obsessed scofflaws who drive too fast without licenses, produce green or black feces, and are as worried about Hollywood celebrities as they are about world events.

(I’m just playin’, America. You know I love you.)
